Mastering Application Deployment with Docker: Techniques, Best Practices, and Advanced Strategies

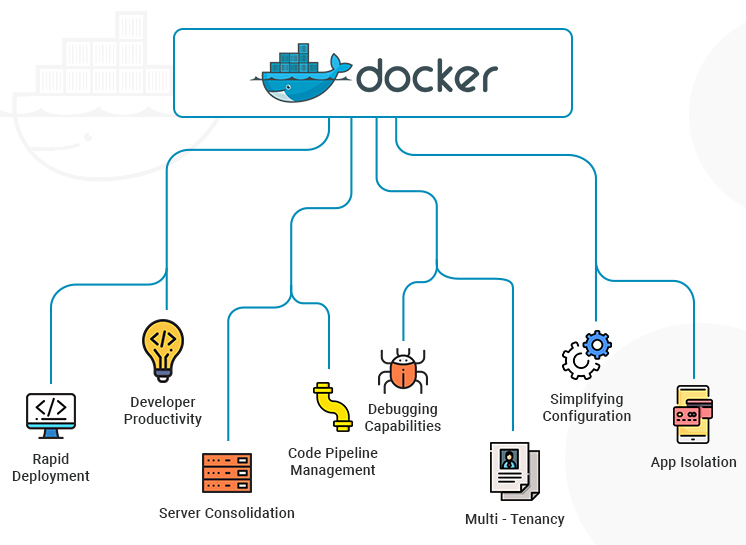

Introduction: Application deployment is a critical aspect of software development, involving the process of making software applications available for use by end-users or other systems. Docker is a powerful platform for building, packaging, and deploying applications in lightweight, portable containers. By mastering application deployment with Docker, you can streamline the deployment process, improve consistency, scalability, and reliability, and simplify the management of complex application environments. In this comprehensive guide, we’ll explore everything you need to know about deploying applications using Docker, from basic concepts to advanced techniques and best practices.

- Understanding Docker Basics: Before diving into application deployment with Docker, it’s essential to understand the basic concepts and components of Docker:

- Containers: Docker containers are lightweight, standalone, and portable units that encapsulate an application and its dependencies, including libraries, runtime environments, and configuration settings.

- Images: Docker images are read-only templates used to create containers. Images define the filesystem, runtime environment, and other parameters required to run an application in a container.

- Docker Engine: The Docker Engine is the core component of Docker responsible for building, running, and managing containers. It consists of the Docker daemon (dockerd), a REST API, and the docker command-line client.

- Dockerfile: A Dockerfile is a text file that contains instructions for building a Docker image. It defines the steps needed to create an image, such as installing dependencies, copying files, and configuring the environment.

- Creating Docker Images: The first step in deploying applications with Docker is to create Docker images containing the application code, dependencies, and configuration. You can build your own images from a Dockerfile or pull pre-built images from Docker Hub, a public registry of Docker images. Here’s an example Dockerfile for a simple Python web application:

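```dockerfile
# Use an official Python runtime as the base image
FROM python:3.9-slim

# Set the working directory in the container
WORKDIR /app

# Copy and install dependencies first, so this layer stays cached
# until requirements.txt changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the rest of the application code into the container
COPY . .

# Document the port the application listens on (published with -p at run time)
EXPOSE 5000

# Define the command to run the application
CMD ["python", "app.py"]
```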

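In this example, the Dockerfile starts from a slim Python base image, copies the application code and its requirements.txt into the container, installs the Python dependencies, declares port 5000, and defines the command that runs the application. Note that EXPOSE only documents the port; it is actually published when the container is started with -p.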

- Building Docker Images: Once you have a Dockerfile defining the image configuration, you can build the Docker image using the docker build command. Here’s how to build the Docker image for the Python web application defined in the previous example:

```bash
docker build -t myapp .
```

This command builds an image from the Dockerfile in the current directory (the trailing . is the build context) and names it myapp. Because no explicit tag is given, Docker tags it as myapp:latest.

- Running Docker Containers: After building the Docker image, you can run Docker containers based on that image using the docker run command. Here’s how to run the Docker container for the Python web application:

```bash
docker run -d -p 5000:5000 myapp
```

This command starts a container from the myapp image in detached mode (in the background) and publishes container port 5000 on port 5000 of the host (-p host_port:container_port), so the application is reachable at http://localhost:5000.
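Because docker run -d returns as soon as the container starts, the application inside may not accept connections yet. In scripts or CI jobs it can help to wait for the published port to respond before continuing. The following is a minimal Python sketch using only the standard library; the helper name wait_for_http and its polling defaults are illustrative assumptions, not part of Docker:

```python
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll `url` until it returns HTTP 200 or `timeout` seconds have passed.

    Hypothetical helper for illustration; Docker itself does not provide it.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused/reset, or an HTTP error status (urllib's
            # HTTPError is an OSError subclass): the app is not ready yet.
            pass
        time.sleep(interval)
    return False
```

For example, after the docker run command above, wait_for_http("http://localhost:5000/") returns True once the application answers on the published port, or False if it does not come up within 30 seconds. This assumes the application responds with status 200 on /.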

- Managing Docker Containers: Docker provides various commands for managing Docker containers, such as starting, stopping, inspecting, and removing containers. Here are some common Docker container management commands:

- Start a stopped container:

```bash
docker start <container_id>
```

- Stop a running container:

```bash
docker stop <container_id>
```

- List running containers (add -a to include stopped ones):

```bash
docker ps
```

- Inspect container details:

```bash
docker inspect <container_id>
```

- Remove a container:

```bash
docker rm <container_id>
```
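If you script these management tasks, docker ps output is easier to consume with its --format option: --format '{{json .}}' prints one JSON object per container. Below is a minimal Python sketch that assumes the docker CLI is on the PATH. The helper names are hypothetical, and the exact set of JSON keys may vary between Docker versions:

```python
import json
import subprocess
from typing import Dict, List


def parse_docker_ps(output: str) -> List[Dict[str, str]]:
    """Parse `docker ps --format '{{json .}}'` output: one JSON object per line."""
    return [json.loads(line) for line in output.splitlines() if line.strip()]


def list_containers(include_stopped: bool = False) -> List[Dict[str, str]]:
    """Run `docker ps` (with -a if requested) and return one dict per container."""
    cmd = ["docker", "ps"] + (["-a"] if include_stopped else []) + ["--format", "{{json .}}"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_docker_ps(result.stdout)
```

For example, [c["Names"] for c in list_containers(include_stopped=True)] collects the name of every container, including stopped ones, much like docker ps -a. Keeping the parsing in a separate function lets you test it without a running Docker daemon.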

- Container Orchestration with Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications using a YAML configuration file. It allows you to define the services, networks, and volumes required by your application and start them with a single command. Here’s an example of a Docker Compose configuration file for the Python web application:

```yaml
version: '3'  # optional; recent Docker Compose releases ignore this field
services:
  web:
    build: .
    ports:
      - "5000:5000"
    volumes:
      - .:/app
```

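This configuration defines a single service named web that builds its image from the Dockerfile in the current directory, publishes port 5000 on the host, and bind-mounts the current directory into the container at /app. The bind mount hides the code copied into the image, which is convenient during development because code changes appear inside the container without a rebuild.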

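To start the application using Docker Compose, run the following command:

```bash
docker compose up -d
```

This command starts the services defined in the Docker Compose configuration file in detached mode. With the older standalone Compose v1 binary, the equivalent command is docker-compose up -d.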

- Scaling Applications with Docker Swarm and Kubernetes: Docker Swarm and Kubernetes are container orchestration platforms that enable you to deploy, scale, and manage containerized applications in production environments. Docker Swarm is Docker’s native clustering and orchestration tool, while Kubernetes is an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation. Both platforms provide replica scaling, load balancing, service discovery, and rolling updates, which makes them well suited to running large-scale containerized applications. Kubernetes can additionally scale workloads automatically with its Horizontal Pod Autoscaler.

To deploy applications using Docker Swarm, initialize a cluster with docker swarm init and then deploy services with docker service create, or deploy a whole Compose file as a stack with docker stack deploy. Kubernetes, on the other hand, uses YAML configuration files called manifests to declare resources such as Deployments, Services, and Pods, which you apply to the cluster with kubectl apply.
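A rolling update is easiest to picture as replacing a few replicas at a time while the rest keep serving traffic. The Python function below is a toy model of that idea only. It is not how Swarm or Kubernetes schedule work internally. In practice you tune the batch size with options such as docker service update --update-parallelism in Swarm, or maxUnavailable and maxSurge in a Kubernetes Deployment.

```python
from typing import List


def rolling_update(replicas: List[str], new_version: str, parallelism: int = 1) -> List[List[str]]:
    """Toy rolling update: replace at most `parallelism` replicas per step.

    Returns a snapshot of the replica versions before the update and after
    each step, showing that the remaining replicas keep running throughout.
    """
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    current = list(replicas)
    history = [list(current)]
    for start in range(0, len(current), parallelism):
        # Stop up to `parallelism` old replicas and start their replacements.
        for i in range(start, min(start + parallelism, len(current))):
            current[i] = new_version
        history.append(list(current))
    return history
```

For example, rolling_update(["v1"] * 4, "v2", parallelism=2) yields three snapshots: all v1, then two v2 and two v1, then all v2. At every step, at least half of the replicas are running.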

- Best Practices for Docker Application Deployment: To deploy applications effectively and efficiently with Docker, consider following these best practices:

- Use lightweight base images: Choose base images that are small in size and optimized for your application’s requirements to reduce image size and improve startup time.

- Minimize layers: Minimize the number of layers in your Docker images to reduce build time and image size. Combine related RUN commands into a single command (for example, installing packages and cleaning up the package cache in the same step) and use multi-stage builds to separate build dependencies from runtime dependencies.

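- Implement health checks: Define a HEALTHCHECK in your Dockerfile (or a healthcheck in your Compose file) so Docker can report whether your application is actually responding. Note that plain docker run only marks a failing container as unhealthy. It takes an orchestrator such as Docker Swarm, or Kubernetes with liveness probes, to replace or restart unhealthy containers automatically.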

- Use environment variables for configuration: Avoid hardcoding configuration values in your Docker images by using environment variables. This allows you to configure your application dynamically at runtime without modifying the image.

- Secure your containers: Follow security best practices, such as regularly updating base images, scanning images for vulnerabilities, and restricting container privileges to minimize security risks.

- Monitor and log containerized applications: Use monitoring and logging tools to monitor the performance, availability, and resource utilization of your containerized applications and troubleshoot issues effectively.

- Automate deployment workflows: Implement automated deployment pipelines using CI/CD (Continuous Integration/Continuous Deployment) tools to streamline the deployment process, reduce human error, and ensure consistency across environments.
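Several of these practices also shape the application code itself. The following is a minimal Python sketch. It assumes the app reads hypothetical APP_PORT and APP_DEBUG variables, logs under the logger name myapp, and serves a hypothetical /health endpoint. None of these are part of the earlier example. Configuration comes from the environment, logs go to stdout where docker logs can collect them, and is_healthy() is a probe a health check can call.

```python
import http.client
import logging
import os
import sys
import urllib.request
from dataclasses import dataclass
from typing import Mapping, Optional, TextIO


@dataclass(frozen=True)
class Settings:
    port: int
    debug: bool


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Build settings from (hypothetical) environment variables, with defaults."""
    env = os.environ if env is None else env
    return Settings(
        port=int(env.get("APP_PORT", "5000")),
        debug=env.get("APP_DEBUG", "false").strip().lower() in ("1", "true", "yes"),
    )


def configure_logging(level: int = logging.INFO, stream: Optional[TextIO] = None) -> logging.Logger:
    """Send log records to stdout so `docker logs` and log drivers can collect them."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))
    logger = logging.getLogger("myapp")
    logger.handlers[:] = [handler]  # avoid duplicate handlers if called twice
    logger.setLevel(level)
    logger.propagate = False
    return logger


def is_healthy(url: str = "http://127.0.0.1:5000/health", timeout: float = 2.0) -> bool:
    """Health probe: True only if the (hypothetical) endpoint answers HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException, ValueError):
        return False
```

Suppose these functions are saved in a hypothetical module app_support.py inside the image. A Dockerfile could then declare HEALTHCHECK CMD python -c "import sys, app_support; sys.exit(0 if app_support.is_healthy() else 1)", because a health check command reports healthy with exit status 0 and unhealthy with 1. Using Python for the probe avoids relying on curl, which slim base images typically do not include. Configuration can then change per container, for example docker run -e APP_DEBUG=true myapp, without rebuilding the image.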

- Conclusion: In conclusion, mastering application deployment with Docker is essential for building, packaging, and deploying applications in a portable and scalable manner. By understanding Docker basics, creating Docker images, running Docker containers, managing containers with Docker Compose, and leveraging container orchestration platforms like Docker Swarm and Kubernetes, you can deploy applications effectively and efficiently in any environment. So dive into Docker application deployment, practice these techniques, and unlock the full potential of Docker for building and deploying modern applications in a containerized world.